October may be Cybersecurity Awareness Month, but protecting your data and personal information should be a priority all year long. Check out these tips to help you develop safe online habits that you can reinforce at work and at home.

Updated on June 27, 2025

Published on April 24, 2025

Cybercriminals become more sophisticated every year, deploying multi-layered, complex attacks that target people at their most vulnerable. Hackers and scammers now use elaborate schemes designed to catch their victims off guard and prey on their kindness. While best practices such as using private networks, creating unique passwords, and locking your devices are still highly recommended, you must also choose tools and software providers that deploy robust, effective measures to help keep your data safe.

The workplace can be just as vulnerable to a breach if employees unknowingly download malware or accidentally expose private data to the wrong audience. With this in mind, here are a few tips to help you stay safe online when using Zoom, along with insight into the security programs we deploy to protect our customer and employee data.

Whether you’re downloading the Zoom Workplace app for the first time or updating to the newest version, make sure you’re getting it from a reputable source, such as the Zoom web portal, the app itself, or an official marketplace like the Apple App Store.

If you receive an unexpected email encouraging you to download the latest version of Zoom Workplace, double-check the sender’s domain (the part of the email address after the @) and hover over the download link (without clicking it) to make sure it’s legitimate. If the link doesn’t point to an actual Zoom domain, such as zoom.com or zoom.us, don’t click any links in the email.
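The "is this really a Zoom domain?" check can be sketched in a few lines of Python. This is an illustration only, assuming the allowlist contains just the two domains named above (Zoom may use other legitimate hosts), and `is_trusted_zoom_link` is a hypothetical helper name. The key point it shows is that a link's real host must equal a trusted domain or end with "." plus that domain, so look-alikes such as `zoom.us.example-login.com` or `notzoom.us`, and tricks like `zoom.us@evil.example`, are rejected.

```python
from urllib.parse import urlsplit

# Assumption: illustrative allowlist containing only the domains named in the text.
TRUSTED_DOMAINS = ("zoom.com", "zoom.us")


def is_trusted_zoom_link(url: str) -> bool:
    """Return True if the URL's host is a trusted domain or a subdomain of one."""
    # .hostname ignores any "user@" prefix and is lowercased; None if there is no host.
    host = urlsplit(url).hostname
    if host is None:
        return False
    host = host.rstrip(".")  # tolerate a fully qualified trailing dot
    for domain in TRUSTED_DOMAINS:
        # Exact match, or a genuine subdomain (note the leading "." in the suffix).
        if host == domain or host.endswith("." + domain):
            return True
    return False


print(is_trusted_zoom_link("https://us02web.zoom.us/j/123"))  # True
print(is_trusted_zoom_link("https://zoom.us.example-login.com/download"))  # False
print(is_trusted_zoom_link("https://zoom.us@evil.example/download"))  # False
```

Note that this only inspects the URL itself; it can't tell you where a legitimate-looking link redirects, which is one more reason not to click links in unexpected emails.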

Below are two emails that look legitimate but are actually fake.

Once you know you’re downloading Zoom Workplace from an authentic source, keep the app updated so you get the latest features, bug fixes, and the best audio and video quality.

There are a few steps you can take to keep your virtual event private and prevent uninvited guests from attending your meeting or webinar. To begin with, avoid using your personal meeting ID (PMI) for every meeting: anyone who obtained your PMI from an earlier meeting can use it to crash your next one. Reserve your PMI for internal meetings with people you know.

Once your meeting begins, we recommend enabling a waiting room so that you can approve each person trying to enter and keep others out. Only allow signed-in users to join, and lock the meeting once it starts. You can also require a meeting passcode, and turn off video and mute audio for attendees when they join, to avoid unwanted or inappropriate conversations. We also recommend limiting screen sharing and annotation to hosts or specific attendees only, so be sure to disable the Share Screen option for everyone else, which also turns off their annotation features.

To learn more about how to stay safe on Zoom, visit our Safety Center.

By now, most people are aware of the importance of creating complex passwords that don’t repeat across apps and devices. But to add an extra layer of protection, we offer single sign-on (SSO) and two-factor authentication integrations to make access to the Zoom Workplace app seamless, secure, and simple.

Our SSO feature lets you sign in with your organization’s identity provider credentials, so you don’t have to maintain a separate username and password. Two-factor authentication adds a second sign-in step: in addition to your main Zoom sign-in, you submit a one-time code generated by a mobile app or sent by text message.
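Zoom doesn’t specify here how app-generated codes are produced, but authenticator apps commonly implement the time-based one-time password algorithm (TOTP, RFC 6238), which is HOTP (RFC 4226) with a counter derived from the current time. The minimal Python sketch below shows that standard algorithm, not Zoom’s implementation; the function names and the one-step clock-drift window are illustrative assumptions.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    msg = struct.pack(">Q", counter)  # counter as an 8-byte big-endian integer
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)  # keep leading zeros


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP with counter = floor(time / step)."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current time step or up to `window` steps on either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        if counter + drift < 0:
            continue  # no valid counter before the Unix epoch
        expected = hotp(secret, counter + drift, digits)
        # Constant-time comparison avoids leaking how many characters matched.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False


# RFC 6238 test secret; at t = 59 s the 8-digit SHA-1 code is 94287082.
print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```

Because the code depends on both a shared secret and the current 30-second window, a stolen password alone isn’t enough to sign in. In practice the shared secret is usually provisioned as a base32 string (for example via a QR code), which Python’s `base64.b32decode` can turn into the raw bytes used here.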

Screen sharing and remote control access have become essential collaboration tools with the rise of hybrid and remote work, so it’s important to know which feature to use and when. Screen sharing allows presenters to display visual content like slides, demos, and training materials, and lets hosts and participants annotate shared screens. Remote control access enables one participant to take control of another’s screen with permission, and is typically used for IT support, software installation, or collaborative document editing.

Both features have important security considerations. Screen sharing risks include accidentally exposing sensitive information and meeting disruptions if permissions aren’t properly restricted. Remote control access carries more serious risks because it gives another user control of your computer, including your mouse and keyboard, which could enable malicious activity if granted to an untrustworthy party.

No one intentionally hands over access to an attacker, but as malicious actors become more advanced, you could unintentionally give access to the wrong person. To use these features safely, here are some recommended best practices:

When granting remote access to your computer, follow these steps to help prevent malicious activities:

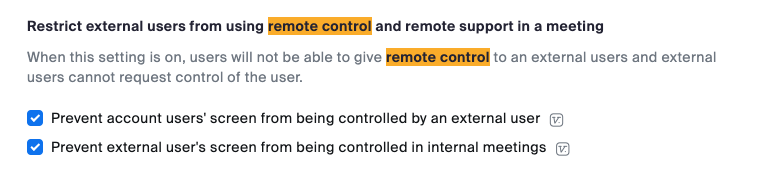

Zoom has several built-in controls designed to help you practice safe remote access, including the ability to:

You may not always know who you’re interacting with, so we developed additional features that foster greater trust and promote safer interactions when communicating with others.

Together, these features make it more difficult for bad actors to impersonate legitimate users or organizations in Zoom Team Chat, Meetings, and Webinars.

With our optional end-to-end encryption (E2EE) feature (currently available for Zoom Meetings and Zoom Phone), the cryptographic keys are known only to the participants’ devices. Enabling this feature adds an extra layer of protection when you communicate across these Zoom solutions, although some Zoom functionality is limited while it’s turned on.

Learn more about encryption in the Zoom Workplace platform here.

What’s more, our new post-quantum E2EE feature can help keep your data safe today and in the future. This advanced layer of encryption is designed to protect your current Zoom Meetings, Zoom Phone, and Zoom Rooms data and safeguard it against potential future attacks that could be carried out with quantum computers.

Cybersecurity isn’t limited to our IT or security teams. We expect all Zoomies to be cyber-aware and have developed numerous initiatives and resources to educate our employees on how to protect against cyber threats.

Our security awareness and training programs go beyond the basics and are specifically designed to create memorable employee experiences in order to reinforce important skills and knowledge all year long. Some of these activities include:

In addition to our internal employee security programs, we also oversee various initiatives within our larger communities to help us mitigate risk, foster innovation, and continue to improve our services, such as:

Achieving our many certifications and attestations requires an ongoing commitment to ensure that our products and services meet strict security and privacy standards. We are honored to have our products recognized by many of the world’s most prominent organizations and to have achieved certifications in areas such as:

Check out the complete list of Zoom attestations, certifications, and standards.

As the adage says, practice makes perfect. With that in mind, we’re constantly evolving our security measures and product innovations to enable our employees and customers to communicate and collaborate more freely. By providing our employees and customers with ongoing resources and education, we’re working toward a future with fewer cyberattacks and a safer online environment.

Stay up-to-date with all of our security and privacy news in our Trust Center.